This blog is a part of my journey “Embarking on the AWS Solution Architect Associate SAA-CO3 Certification Journey”

Table of Content

- Introduction

- Buckets

- Objects

- Security

- User Policy

- S3 Bucket Policies

- Object Access Control List

- Bucket Access Control List

- Scenarios in Policy Assignment.

- Static Website

- S3 versioning

- S3 Replication

- S3 Storage Class

- S3 Durability

- S3 availability

- General Purpose

- S3 Infrequent Access

- Amazon S3 One Zone – Infrequent Access

- Amazon Glacier Storage

- Amazon S3 Intelligent Tiering

- S3 Storage Class Comparsion

- Moving Between Storage Classes

- Lifecycle rules

- Storage class analysis

- S3 – Requester pays

- S3 Event Notification

- Available Integration with

- S3

- SQS

- Lambda

- Event Bridge

- Available Integration with

- S3 performance

- S3 Select & Glacier Select

- S3 Batch processing

- S3 Encryption

- Amazon S3 Encryption – SSE-S3

- Amazon S3 Encryption – SSE-KMS

- Amazon S3 Encryption – SSE-C

- Amazon S3 Encryption – Client-Side Encryption

- Amazon S3 – Encryption in transit (SSL/TLS)

- Default Encryption vs. Bucket Policies

- S3 Cors

- Amazon S3 – MFA Delete

- S3 Access Logs

- Amazon S3 – Pre-Signed URLs

- S3 Glacier Vault Lock

- S3 Object Lock

- S3 – Access Points

Introduction

- Amazon S3 is one of the main building blocks of AWS.

- It is advertised as “Infinity Scaling” Storage

- Many websites uses Amazon S3 as backbone

- Many AWS service uses Amazon S3 as an integration as well

- Use cases

- Backup & Storages

- Hybrid Cloud Storage

- Data lake

- Diaster Recovery

- 5. Application hosting

- 8. Software delivery

- Archive

- 9. Static Websites

Buckets

- Amazon S3 allows people to store objects (files) in buckets (directories)

- Buckets must have globally qunie name across all the regions and all accounts

- Buckets are defined at regional level

- S3 looks like a global service but buckets are created in a region

- Bucket naming conventions

- No upper case, No underscore

- 3-63 characters long

- Not an IP

- Must start with lowercase letter of number

- Must not start with prefix xn--

- Must not end with prefix -s3alias

Objects

- Objects (files) have a key

- The key is the fullpath of the object

- s3://my-bucket/m_file.txt

- s3://my-bucket/my-folder/my-file.txt

- The key os compose of prefix + object name

- There is no concept of directories with buckets

- Object values are the content of the body

- Max object size is 5TB (5000GB)

- If uploading more than 5 GB, must use multi-part upload

- Metadata (list of key/value pairs of system or user data)

- Tags(Unicode key/value pair upto 10) – useful for security lifecycle

- Objects can be versioned

Security

A very critical point as far as security is concered which weigh 30% of your exam.

- User-Based.

- IAM Policies – Which API calls should be allowed for a specific user from IAM.

- Resource Based

- Bucket Policies, bucket wide rules from the S3 console – allow cross account

- Object Access Control List (ACL) – finer grain (can be disabled)

- Bucket Access Control List – less common can be disabled.

- Note: An IAM principal can access an S3 object if

- The user IAM policy allows it or the resource policy allows it.

- and there is not explicit DENY

- Encryption: Objects can be encrypted in Amazon S3 using encryption keys

- S3 Bucket Policies

- JSON based policies, which contains

- Resource: Buckets and Objects

- Effect: Allow/Deny/

- Actions: Set of API to Allow or Deny

- principal: The account or user to apply the policy to.

- Use S3 bucket policy to

- Grant public access to bucket

- Force Object to be encrypted at upload

- Grant access to another account

- JSON based policies, which contains

Scenarios in Policy Assignment.

- Public Access – Use Bucket Policy

- User Access to S3 – IAM permission

- EC2 instance access -> EC2 Instance Role with IAM permission

- Cross Account – Bucket Policy

- Block Public Access -> Provided by AWS while creating the bucket

1. This setting was created to prevent company data leaks

2. If you know your bucket should never be public leave these on.

3. Can be set at account level

Website Overview – Static Website

- S3 can host static websites and have them accessible on the internet

- The website url will be depending on the region

http://bucket-name.s3-website.aws-region.amazonaws.com - If you get 403 forbidden error, make sure the bucket policy allows public read.

S3 versioning.

- You can version your files in Amazon S3.

- It is enabled at bucket level

- Same key overwrite will change version

- It is best practice to version your buckets

- Protects against unintended deletes

- Easy Rollback to previous version

- Notes:

- Any file that is not versioned prior to enabling versioning will have version as null.

- Suspending the versioning does not delete the previous versions.

S3 Replication

- Must enable versioning in source and destination buckets

- There are two types of replication

- Cross Region Replication (CRR)

- Same Region Replication (SRR)

- Buckets can be in different AWS accounts

- Copying is asynchronous

- Must give proper IAM permisiion to S3.

- Use cases

- CRR -> Compliance, lower latency access, replication across accounts.

- SRR -> log aggregation, live replication between prod and test account

- After you enable Replication, only new objects are replicated.

- Optionally, you can replicate existsing object using S3 Batch Replication, it replicaates existing object and objects that failed replication.

- For Delete Operation

- Can replicate delete markers from source to target (optional setting)

- Deletion with a version Id are not replicated (To avoid malicious delete)

- There is no chaining of replications.

- If a bucket 1 has replication 2, and 2 to 3. Then object created on 1 won’t get created on 3. Not transitive replication

S3 Storage Class

There are different types of storage classes in S3, let’s explore them.

- Amazon S3 Standard-General Purpose

- Amazon S3 Standard – Infrequent Access (IA)

- Amazon S3 One Zone – Infrequency Access

- Amazon S3 Glacier Instant Retrival

- Amazon S3 Glacier Flexible Retrival

- Amazon S3 Glacier Deep Archive

- Inteligent Tiering

We can move objects between these classes manually or using S3 lifecycle configurations.

S3 Durability

- High Durability (99. 11 9s) of object across multiple AZ

- If you store 10 millions object with Amazon S3, you can on average expect to icur a loss of single object once every 10 thousand years

- Same for all storage classes

S3 availability

- availability measure how readily a sservice is available

- Varies depending on storage classes

- Example S3 standars has 99.99% availability i.e not available for 53 minutes a year

General Purpose

- 99.99% availability

- Used for frequent access data

- Low latency and high throughput

- Sustain 2 concurrent failures

- Use cases: Big Data analytics, mobile & gaming applications, content distribution

S3 Infrequent Access

- For data that is less frequently accessed, but requires rapid access when needed

- Lower cost than S3 standard.

- 99.9% availability

- Use cases: Diaster Recovery, Backups

Amazon S3 One Zone – Infrequent Access (S3 One zone-IA)

- High Durability in a single AZ, data will be lost when AZ is destroyed.

- 99.5% availability

- Use cases: Storing Seconary Backup, copies of on premise data or data you can recreate

Amazon Glacier Storage

- Low cost storage meant for archive and Backups

- Pricing Model: price for storage + object reterival cost

- Amazon S3 Glacier Instant reterival

- Milliseconds reterival, great for data accessed once a quarter

- Minimum Storage duration of 90 days.

- Amazon S3 Glacier Flexible reterival

- Expedited (1 to 5 minutes) to reterive.

- Standard (3 to 5 hours)

- Bulk(5 to 12 hrs)

- Minimum Storage duration of 90 days.

- Amazon S3 Glacier Deep Archive – for long term storage

- Standard (12 hours), Bulk (48 hours)

- Minimum Storage days 180 days

- Amazon S3 Glacier Instant reterival

Amazon S3 Intelligent Tiering

- Small Monthly monitoring and auto Tiering fee.

- Moves objects automatically between access tier based on usage.

- There are no reterival charges in S3 Intelligent Tiering

- Types

- Frequent Access tier (automatic): Default tier

- Infrequent Access Tier(automatic): Objects not accessed for 30 days.

- Archive Instant Access Tier(automatic): Objects not accessed for 90 days.

- Archive Access Tier(optional): Configured from 90 days to 700 days.

- Deep Archive Access Tier(optional): From 180 days to 700 days

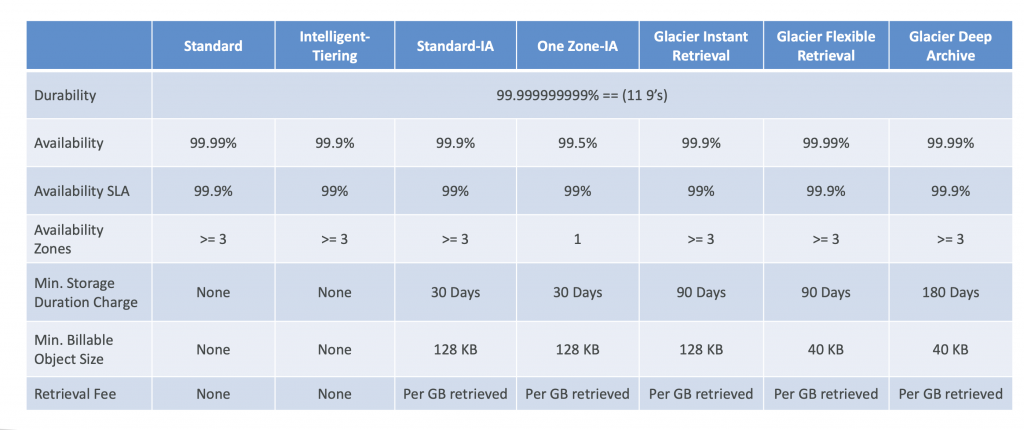

Comparsion

Moving Between Storage Classes

- you can transition object between storage classes.

- For infrequent accessed objects, move them to standard IA

- For archive objects that you don’t need fast access to, move them to glacier or Glacier Deep Archive

- Moving object can be automated using Life cycle rules.

Lifecycle rules

- Transition Actions – Configure objects to transition to another storage class.

- Move objects to standard IA class 60 days after creation.

- Move to glacier for archive after 6 months.

- Expiration Actions -> Configure object to expire (delete) after some time.

- Access Log files can be set to delete after 365 days.

- Can be used to delete old version of files.

- Can be used to delete incomplete multi-parts uploads.

- Rules can be created for certain prefix.

- Rules can be created for certain object tags.

Storage class analysis

- Help you to decide when to transition object to right storage class.

- Recommendation for Standard and Standard IA. Does not work for One Zone IA or Glacier

- Reports is updated daily.

- 24 to 48 hrs to start seeing data analysis.

- Good first step to put together lifecycle rules can improve them.

S3 – Requester Pays

- In General, bucket owners pay for all Amazon S3 storage, data transfer associated with the bucket.

- With Requester pays bucket, the requester instead of the bucket owner pays the cost of the request and the data downloaded from the bucket.

- Helpful, when you wanna share large data set with another account.

- The Requester must be authenticated in AWS and cannot be anonymous.

S3 Event Notification

- Events like Object created, S3:objectRemoved, s3:ObjectRestore, s3:Replication

- Object name filtering possible.

- Use case: Generate thumbnails of images uploaded to S3.

- Can create as many “S3 events as desired”

- S3 events notification typically delivers events in seconds but can sometimes take a minute or longer.

Available Integration with

- SNS

- SQS

- Lambda

- Amazon Event bridge

- Advance Filtering option with JSON rules (metadata, object size, name)

- Multiple destination – Step Functions, Kiness Streams, Kiness Firehose

- Capabilities -> Archive, Replay Events, Reliable delivery

S3 performance

- Amazon S3 automatically scales to high request rates, latency around 100-200 ms

- Your application can achieve at least 3,500 PUT/COPY/POST/DELETE or 5,500 GET/HEAD requests per second per prefix in a bucket.

- There are no limits to the number of prefixes in a bucket.(object path => prefix)

- If your spread your read across all prefixes you can achieve high request per second for Get and PUT.

- How to optimize Write.

- Multi part upload

- recommended for files > 100MB, must use for files > 5GB

- Can help parallelize uploads (speed up transfers)

- S3Transfer Acceleration

- Increase transfer speed by transferring file to an AWS edge location which will forward the data to the S3 bucket in the target region

- Compatible with multi-part upload

- Multi part upload

- Read Optimization

- S3 Byte-Range Fetches

- Parallelize GETs by requesting specific byte ranges

- Better resilience in case of failures

- Can be used to speed up downloads

- Can be used to retrieve only partial data (for example the head of a file)

- S3 Byte-Range Fetches

S3 Select & Glacier Select

- Retrieve less data using SQL by performing server-side filtering

- Can filter by rows & columns (simple SQL statements)

- Less network transfer, less CPU cost client-side

S3 Batch processing

- Perform bulk operations on existing S3 objects with a single request, example:

• Modify object metadata & properties

• Copy objects between S3 buckets

• Encrypt un-encrypted objects

• Modify ACLs,tags

• Restore objects from S3 Glacier

• Invoke Lambda function to perform custom action on each object - A job consists of a list of objects, the action to perform, and optional parameters

- S3 Batch Operations manages retries, tracks progress, sends completion notifications, generate reports

- You can use S3 Inventory to get object list and use S3 Select to filter your objects

S3 Security

- You can encrypt objects in S3 buckets using one of 4 methods

- Server-Side Encryption (SSE)

- Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3) – Enabled by Default

- Encrypts S3 objects using keys handled, managed, and owned by AWS

- Server-Side Encryption with KMS Keys stored in AWS KMS (SSE-KMS)

- Leverage AWS Key Management Service (AWS KMS)to manage encryption keys

- Server-Side Encryption with Customer-Provided Keys (SSE-C)

- When you want to manage your own encryption keys

- Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3) – Enabled by Default

- Client-Side Encryption

- It’s important to understand which ones are for which situation for the exam

Amazon S3 Encryption – SSE-S3

- Encryption using keys handled, managed, and owned by AWS

- Object is encrypted server-side

- Encryption type is AES-256

- Must set header “x-amz-server-side-encryption”: “AES256”

- Enabled by default for new buckets & new objects

Amazon S3 Encryption – SSE-KMS

- Encryption using keys handled and managed by AWS KMS (Key Management Service)

- KMS advantages: user control + audit key usage using CloudTrail

- Object is encrypted server side

- Must set header “x-amz-server-side-encryption”: “aws:kms”

- Limitation

- If you use SSE-KMS, you may be impacted by the KMS limits

- When you upload, it calls the GenerateDataKey KMS API

- When you download, it calls the Decr ypt KMS API

- Count towards the KMS quota per second (5500, 10000, 30000 req/s based on region)

- You can request a quota increase using the Service Quotas Console

Amazon S3 Encryption – SSE-C

- Server-Side Encryption using keys fully managed by the customer outside of AWS

- Amazon S3 does NOT store the encryption key you provide

- HTTPS must be used

- Encryption key must provided in HTTP headers, for every HTTP request made

Amazon S3 Encryption – Client-Side Encryption

- Use client libraries such as Amazon S3 Client-Side Encryption Library

- Clients must encrypt data themselves before sending to Amazon S3

- Clients must decrypt data themselves when retrieving from Amazon S3

- Customer fully manages the keys and encryption cycle

Amazon S3 – Encryption in transit (SSL/TLS)

- Encryption in flight is also called SSL/TLS

- Amazon S3 exposes two endpoints:

- HTTP Endpoint – non encrypted

- HTTPS Endpoint – encryption in flight

- HTTPS is recommended

- HTTPS is mandatory for SSE-C

- Most clients would use the HTTPS endpoint by default

Default Encryption vs. Bucket Policies

- SSE-S3 encryption is automatically applied to new objects stored in S3 bucket

- Optionally, you can “force encryption” using a bucket policy and refuse any API call

- to PUT an S3 object without encryption headers (SSE-KMS or SSE-C)

S3 Cors

- Cross-Origin Resource Sharing (CORS)

- Origin = scheme (protocol) + host (domain) + port

- example: https://www.example.com (implied port is 443 for HTTPS, 80 for HTTP)

- Web Browser based mechanism to allow requests to other origins while visiting the main origin

- Same origin: http://example.com/app1 & http://example.com/app2

- Different origins: http://www.example.com & http://other.example.com

- The requests won’t be fulfilled unless the other origin allows for the requests, using CORS Headers (example: Access-Control-Allow-Origin)

- If a client makes a cross-origin request on our S3 bucket, we need to enable the correct CORS headers

- It’s a popular exam question

- You can allow for a specific origin or for * (all origins)

Amazon S3 – MFA Delete

- MFA (Multi-Factor Authentication) – force users to generate a code on a device (usually a mobile phone or hardware) before doing important operations on S3

- MFA will be required to:

- Permanently delete an object version

- Suspend Versioning on the bucket

- MFA won’t be required to:

- Enable Versioning

- List deleted versions

- To use MFA Delete, Versioning must be enabled on the bucket

- Only the bucket owner (root account) can enable/disable MFA Delete

S3 Access Logs

- For audit purpose, you may want to log all access to S3 buckets

- Any request made to S3, from any account, authorized or denied, will be logged into another S3 bucket

- That data can be analyzed using data analysis tools…

- The target logging bucket must be in the same AWS region

- Do not set your logging bucket to be the monitored bucket

- It will create a logging loop, and your bucket will grow exponentially

Amazon S3 – Pre-Signed URLs

- Generate pre-signed URLs using the S3 Console, AWS CLI or SDK

- URL Expiration

- S3 Console – 1 min up to 720 mins (12 hours)

- AWS CLI – configure expiration with –expires-in parameter in seconds (default 3600 secs, max. 604800 secs ~ 168 hours)

- Users given a pre-signed URL inherit the permissions of the user that generated the URL for GET / PUT

- Examples:

- Allow only logged-in users to download a premium video from your S3 bucket

- Allow an ever-changing list of users to download files by generating URLs dynamically

- Allow temporarily a user to upload a file to a precise location in your S3 bucket

S3 Glacier Vault Lock

- Adopt a WORM (Write Once Read Many) model

- Create a Vault Lock Policy

- Lock the policy for future edits (can no longer be changed or deleted)

S3 Object Lock

- Versioning must be enabled

- Adopt a WORM (Write Once Read Many) model

- Block an object version deletion for a specified amount of time

- Retention mode – Compliance:

- Object versions can’t be overwritten or deleted by any user, including the root user

- Objects retention modes can’t be changed, and retention periods can’t be shortened

- Retention mode – Governance:

- Most users can’t overwrite or delete an object version or alter its lock settings

- Some users have special permissions to change the retention or delete the object

- Retention Period: protect the object for a fixed period, it can be extended

- Legal Hold:

- protect the object indefinitely, independent from retention period

- can be freely placed and removed using the s3:PutObjectLegalHold IAM permission

S3 – Access Points

- Access Points simplify security management for S3 Buckets

- Each Access Point has:

- its own DNS name (Internet Origin or VPC Origin)

- An access point policy (similar to bucket policy) – manage security at scale

- We can define the access point to be accessible only from within the VPC

- You must create aVPC Endpoint to access the Access Point (Gateway or Interface Endpoint)

- The VPC Endpoint Policy must allow access to the target bucket and Access Point